This is the second in a series of posts seeking to understand the effect of social media on political polarization. In the first part, I argued that aggregate trends in polarization and news consumption don't map well to the rise of social media, and thus social media is unlikely to be the primary direct driver of polarization in the U.S. (within media, cable news is a more likely culprit). However, social media could still have important effects on polarization that we'd like to understand. To do this, I'm going to drill into "micro-level" data: the actual content of Facebook users' news feeds. In a later post I'll tie this back to the effect on those users' political attitudes.

The micro-level data comes primarily from two sources: feed data collected by Levy (2020) using a browser extension, and a larger but less curated dataset released by Comscore. I'll describe and analyze this in more detail below. [Disclosure: Ro'ee Levy is a collaborator.]

Overall, I conclude that the Facebook news feed is on average more aligned with a user’s political orientation (compared to web search or direct browsing), but this statement alone masks important complexity. Facebook is more likely to show users content that strongly confirms or strongly opposes their views, mainly at the expense of neutral sources. In a bit more detail:

- Users are 3x more likely to see news from pro-attitudinal sources than from counter-attitudinal sources, but this is also true for other channels of news consumption such as direct browsing and search. ("Pro-attitudinal" means that the news source has the same political leaning as the reader, e.g. a Democrat reading The Washington Post.)

- However, Facebook users are more likely to see extreme content: 40% more likely for pro-attitudinal sources and 20% for counter-attitudinal.

- The political lean comes mainly from what Pages users choose to Like: posts from friends are more extreme than other sources, but also more likely to show conflicting views.

Facebook vs. Other Sources

Let's start by understanding how news on Facebook feeds compares to other online browsing behavior. I'll separate this into three distinct channels: “Direct”, “Search”, and “Social”. Direct refers to going to a website like nytimes.com and reading the articles; Search to arriving via search engines like Google; and Social to arriving via social media, which is primarily Facebook.

My data comes from Levy (2020), who recruited users via Facebook ads to install a browser plugin that would monitor their browsing, and also asked them questions about their political leaning. He also analyzes another dataset collected by Comscore, but that dataset doesn't measure the partisan lean of users. Instead, Levy (following previous researchers) imputes partisan lean on the Comscore data using zip code, which is a bit sketchy, so I'll mostly focus on the plugin data.

Levy includes some interesting descriptive data in Tables 4a and 4b of his paper. There he constructs various measures of political segregation, and by most of these Social is about 50% more segregated than Direct and Search (which are comparable). However, the most interesting part is a breakdown of articles into 5 categories, based on whether articles are (strongly/weakly) in (dis-)agreement with a user’s political leaning. These paint a nuanced picture, and I've reproduced them below:

Levy Browser Extension Data (Table 4b of paper)

| Channel | Pro+ | Pro | Mod | Counter | Counter+ |
|---|---|---|---|---|---|
| Non-FB (86%) | 0.190 | 0.429 | 0.223 | 0.131 | 0.025 |
| FB (14%) | 0.264 | 0.399 | 0.183 | 0.127 | 0.030 |

Comscore Data (sketchy, for reasons discussed above; Table 4a)

| Channel | Pro+ | Pro | Mod | Counter | Counter+ |
|---|---|---|---|---|---|
| Direct (50%) | 0.067 | 0.303 | 0.365 | 0.219 | 0.045 |
| Search (37%) | 0.117 | 0.299 | 0.284 | 0.220 | 0.081 |
| Social (5%) | 0.147 | 0.330 | 0.193 | 0.235 | 0.096 |

Users are more likely to see both extreme pro and extreme counter content on social media. In Levy’s data, there is a 40% increase in extreme pro and 20% increase in extreme counter content, with the main loser being moderate news. The Comscore data shows similar but stronger effects: users are 50% more likely to see Counter+ and 100% more likely to see Pro+. Search is somewhere in between Direct and Social in terms of this effect, suggesting that data-driven optimization generally leads to more extreme news consumption.
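To make the Levy numbers concrete, here is a minimal sketch of the arithmetic, using only the shares from the browser-extension table above. The dictionary layout and the helper name `relative_change` are my own choices for illustration, not anything from Levy's paper.

```python
# Shares copied from the Levy browser-extension table (Table 4b of the paper).
levy = {
    "Non-FB": {"Pro+": 0.190, "Pro": 0.429, "Mod": 0.223, "Counter": 0.131, "Counter+": 0.025},
    "FB":     {"Pro+": 0.264, "Pro": 0.399, "Mod": 0.183, "Counter": 0.127, "Counter+": 0.030},
}


def relative_change(table, channel, baseline, category):
    """Fractional change in a category's share for `channel` relative to `baseline`."""
    return table[channel][category] / table[baseline][category] - 1.0


# Print the FB vs. non-FB change for every category.
for category in levy["FB"]:
    change = relative_change(levy, "FB", "Non-FB", category)
    print(f"{category:>8}: {change:+.0%}")
```

Pro+ comes out at roughly +39% and Counter+ at +20%, while the moderate share drops by a bit under a fifth, which is where the "40% / 20%" summary comes from.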

On the other hand, the overall fraction of counter-attitudinal news (after removing moderate news) is the same for all channels (close to 20% for both FB and non-FB in Levy, close to 42% for all channels in Comscore). I believe the Levy data more than Comscore, so I'll average this out to say that for all channels, users are about 3x as likely to see pro-attitudinal as counter-attitudinal news.
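The "after removing moderate news" calculation is simple enough to spell out. The sketch below recomputes the counter-attitudinal share for each channel from the two tables above; the function name `counter_share` and the tuple layout are just my own conventions.

```python
# Rows are (Pro+, Pro, Mod, Counter, Counter+), copied from Tables 4a and 4b above.
channels = {
    "Levy Non-FB":     (0.190, 0.429, 0.223, 0.131, 0.025),
    "Levy FB":         (0.264, 0.399, 0.183, 0.127, 0.030),
    "Comscore Direct": (0.067, 0.303, 0.365, 0.219, 0.045),
    "Comscore Search": (0.117, 0.299, 0.284, 0.220, 0.081),
    "Comscore Social": (0.147, 0.330, 0.193, 0.235, 0.096),
}


def counter_share(row):
    """Share of counter-attitudinal news among non-moderate news."""
    pro_plus, pro, _mod, counter, counter_plus = row
    pro_total = pro_plus + pro
    counter_total = counter + counter_plus
    return counter_total / (pro_total + counter_total)


for name, row in channels.items():
    print(f"{name:<16} {counter_share(row):.1%}")
```

The two Levy rows land at about 19-20% and the three Comscore rows at about 41-42%, so within each dataset the channels are nearly indistinguishable on this measure.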

To contextualize the extremism of Facebook-recommended news: 57% of individuals consume news from FB that is more conservative than WSJ or more liberal than WaPo, compared to 39% outside FB. Similarly, 19% of individuals consume news more extreme than Boston Globe / Fox News, vs. 5% outside FB. This comes from Figure 3 of Levy (2020).

Interestingly, Facebook also has a left-leaning skew relative to other sources of news, contrary to some conventional wisdom.

Where Do Slanted Articles Come From?

Levy’s data also separates the Facebook feed into content from friends and from Pages. It turns out that most of the ideological segregation comes from Pages:

Levy Feed Data by Source (from Table 4b; also includes an overall measure of Segregation)

| Source | Segregation | Pro+ | Pro | Mod | Counter | Counter+ |
|---|---|---|---|---|---|---|
| Friends (59.5%) | 0.208 | 0.236 | 0.391 | 0.197 | 0.140 | 0.036 |
| Pages (40.5%) | 0.290 | 0.345 | 0.376 | 0.153 | 0.110 | 0.020 |
| Non-FB (86%) | 0.194 | 0.190 | 0.429 | 0.223 | 0.131 | 0.025 |

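In particular, relative to non-Facebook browsing, we see that friends are only slightly more likely to give Pro+ content and almost 1.5x as likely to give Counter+ content, whereas Pages are less likely to give Counter+ content (and, per the table, considerably more likely to give Pro+ content).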
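For reference, here is a small sketch of the ratios behind that comparison, using only the Pro+ and Counter+ columns of the table above and non-Facebook browsing as the baseline. The helper name `ratio_vs_non_fb` is hypothetical.

```python
# Pro+ and Counter+ shares by source, copied from the feed table above.
feed = {
    "Friends": {"Pro+": 0.236, "Counter+": 0.036},
    "Pages":   {"Pro+": 0.345, "Counter+": 0.020},
    "Non-FB":  {"Pro+": 0.190, "Counter+": 0.025},
}


def ratio_vs_non_fb(source, category):
    """How many times more likely a category is for `source` than for non-FB browsing."""
    return feed[source][category] / feed["Non-FB"][category]


for source in ("Friends", "Pages"):
    for category in ("Pro+", "Counter+"):
        print(f"{source:<8} {category:<9} {ratio_vs_non_fb(source, category):.2f}x")
```

Friends come out at about 1.24x for Pro+ and 1.44x for Counter+, while Pages come out at about 1.82x for Pro+ and 0.80x for Counter+.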

This is a pretty interesting take-away, but I'm a bit uncertain what to conclude from it. If Pages didn't exist, would Facebook's algorithm put more effort into skewing posts from Friends? Or is it that Pages are more linked to advertising revenue and therefore more heavily filtered to agree with users? If anyone has good explanations I'd be interested to hear from you.

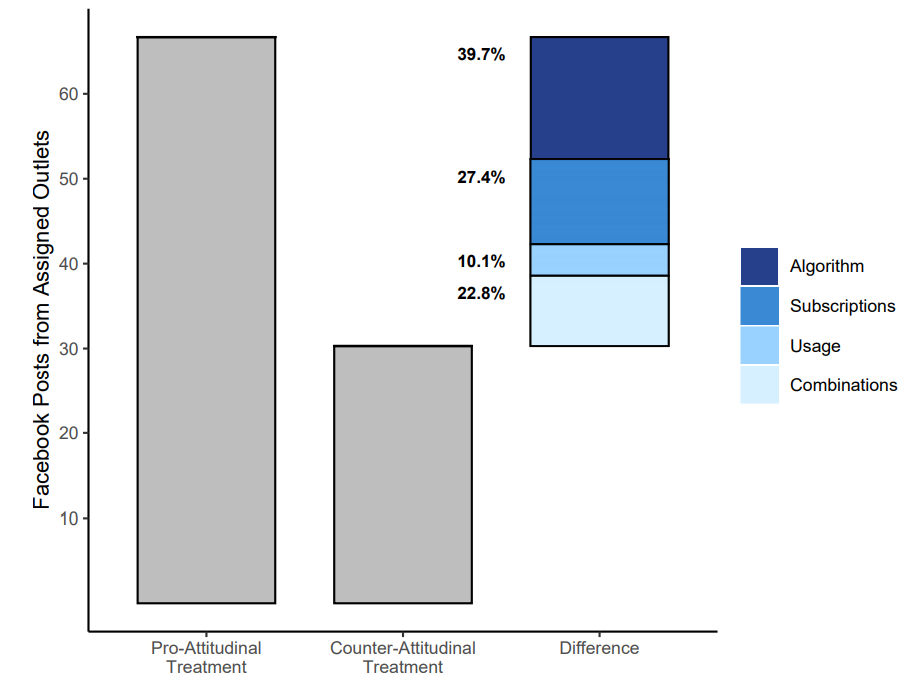

Levy's Figure 8 provides an alternate look at this, based on nudging users to Like either a pro- or counter-attitudinal news outlet (more on this in the next post):

Figure 8 from Levy (2020)

The way to interpret this figure is that, after the nudge, a user will see a little over twice as many stories from that outlet if it is pro-attitudinal vs. counter-attitudinal (note this is better than the baseline 3:1 ratio). Where does the difference come from?

- 40% comes from the algorithm showing more posts in the pro-attitudinal setting;

- 27% comes from pro-attitudinal users being more likely to click Like in the first place;

- 10% comes from counter-attitudinal users decreasing their Facebook usage.

The remainder (about 23%) is non-linear interactions between these effects. These calculations are based on somewhat speculative assumptions, so I wouldn't take them too literally (especially the 10% reduction in usage). But I'd trust the rough ordering, suggesting that both user behavior and algorithm behavior matter, with algorithm behavior mattering a bit more.

How Much News Comes from Facebook?

Finally, let's estimate how much news actually comes from Facebook, since it's smaller than you might think. My guess is that about 10% of political news is referred through Facebook. Comscore finds 4% while Levy finds 14% (but this is an obvious overestimate since all people in the sample are Facebook users). But Levy also notes that “Parsely (2018) tracks pages viewed in thousands of sites and estimates that 16% of traffic related to Donald Trump in April-May 2018 is from social media”. These estimates vary widely but average out to around 10%.

Conclusion

Overall, my take is that the primary issue with Facebook is not the slant but rather the extremism of news on Facebook's feed. People are seeing extreme news that confirms their opinions, but they see extreme news that contradicts their opinions as well. It's not clear how helpful the latter is: I'd imagine it's easy to reject those articles, and it could cause people to dig in further to previously held beliefs. Moderate counter-attitudinal news, in contrast, could be an effective intervention to decrease polarization, and we'll see that this is indeed the case in the next part of the series.