My last few posts have been rather abstract. I thought I'd use this one to go into some details about the actual system we're working with.

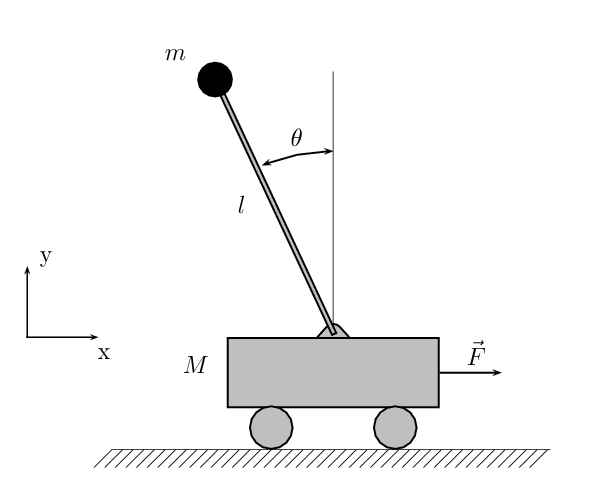

As I mentioned before, we are looking at a cart pole in a water tunnel. A cart pole is sometimes also called an inverted pendulum. Here is a diagram from wikipedia:

The parameter we have control over is F, the force on the cart. We would like to use this to control both the position of the cart and the angle of the pendulum. If the cart is standing still, the only two possible fixed points of the system are $\theta = 0$ (the bottom, or "downright") and $\theta = \pi$ (the "upright"). Since $\theta = 0$ is easy to get to, we will be primarily interested with getting to $\theta = \pi$.

For now, I'm just going to worry about the regular cart pole system, without introducing any fluid dynamics. This is because the fluid dynamics are complicated, even with a fairly rough model (called the Quasi-steady Model), and I don't know how to derive them anyway. Before continuing, it would be nice to have an explicit parametrization of the system. There are two position states we care about: $x$, the cart position; and $\theta$, the pendulum angle, which we will set to $0$ at the bottom with the counter-clockwise direction being positive. I realize that this is not what the picture indicates, and I apologize for any confusion. I couldn't find any good pictures that parametrized it the way I wanted, and I'm going to screw up if I use a different parametrization than what I've written down.

At any rate, in addition to the two position states $x$ and $\theta$, we also care about the velocity states $\dot{x}$ and $\dot{\theta}$, so that we have four states total. For convenience, we'll also name a variable $u := \frac{F}{M}$, so that we have a control input $u$ that directly affects the acceleration of the cart. We also have system parameters $M$ (the mass of the cart), $g$ (the acceleration due to gravity), $l$ (the length of the pendulum arm), and $I$ (the inertia of the pendulum arm). With these variables, we have the following equations of motion:

$\left[ \begin{array}{c} \dot{x} \ \dot{\theta} \ \ddot{x} \ \ddot{\theta} \end{array} \right] = \left[ \begin{array}{c} \dot{x} \ \dot{\theta} \ 0 \ -\frac{mgl\sin(\theta)}{I} \end{array} \right] + \left[ \begin{array}{c} 0 \ 0 \ 1 \ -\frac{mg\cos(\theta)}{I} \end{array} \right] u$

You will note that the form of these equations is different from in my last post. This is because I misspoke last time. The actual form we should use for a general system is

$\dot{x} = f(x) + B(x)u,$

or, if we are assuming a second-order system, then

$\left[ \begin{array}{c} \dot{q} \ \ddot{q} \end{array} \right] = \left[ \begin{array}{c} \dot{q} \ f(q,\dot{q}) \end{array} \right] + B(q,\dot{q}) u.$

Here we are assuming that the natural system dynamics can be arbitrarily non-linear in $x$, but the effect of control is still linear for any fixed system state (which, as I noted last time, is a pretty safe assumption). The time when we use the form $\dot{x} = Ax + Bu$ is when we are talking about a linear system --- usually a linear time-invariant system, but we can also let $A$ and $B$ depend on time and get a linear time-varying system.

I won't go into the derivation of the equations of motion of the above system, as it is a pretty basic mechanics problem and you can find the derivation on Wikipedia if you need it. Instead, I'm going to talk about some of the differences between this system and the underwater system, why this model is still important, and how we can apply the techniques from the last two posts to get a good controller for this system.

Differences from the Underwater System

In the underwater system, instead of having gravity, we have a current (the entire system is on the plane perpendicular to gravity). I believe that the effect of current is much the same as the affect of gravity (although with a different constant), but that could actually be wrong. At any rate, the current plays the role that gravity used to play in terms of defining "up" and "down" for the system (as well as creating a stable fixed point at $\theta = 0$ and an unstable fixed point at $\theta = \pi$).

More importantly, there is significant drag on the pendulum, and the drag is non-linear. (There is always some amount of drag on a pendulum due to friction of the joint, but it's usually fairly linear, or at least easily modelled.) The drag becomes the greatest when $\theta = \pm \frac{\pi}{2}$, which is also the point at which $u$ becomes useless for controlling $\theta$ (note the $\cos(\theta)$ term in the affect of $u$ on $\ddot{\theta}$). This means that getting past $\frac{\pi}{2}$ is fairly difficult for the underwater system.

Another difference is that high accelerations will cause turbulence in the water, and I'm not sure what affect that will have. The model we're currently using doesn't account for this, and I haven't had a chance to experiment with the general fluid model (using PDEs) yet.

Why We Care

So with all these differences, why am I bothering to give you the equations for the regular (not underwater) system? More importantly, why would I care about them for analyzing the actual system in question?

I have to admit that one of my reasons is purely pedagogical. I wanted to give you a concrete example of a system, but I didn't want to just pull out a long string of equations from nowhere, so I chose a system that is complex enough to be interesting but that still has dynamics that are simple to derive. However, there are also better reasons for caring about this system. The qualitative behaviour of this system can still be good for giving intuition about the behaviour of the underwater system.

For instance, one thing we want to be able to do is swing-up. With limited magnitudes of acceleration and a limited space (in terms of $x$) to perform maneuvers in, it won't be possible in general to perform a swing-up. However, there are various system parameters that could make it easier or harder to perform the swing-up. For instance, will increasing $I$ (the inertia of the pendulum) make it easier or harder to perform a swing-up? (You should think about this if you don't know the answer, so I've provided it below the fold.)

The answer is that higher inertia makes it easier to perform a swing-up (this is more obvious if you think about the limiting cases of $I \to 0$ and $I \to \infty$). The reason is that a higher moment of inertia makes it possible to store more energy in the system at the same velocity. Since the drag terms are going to depend on velocity and not energy, having a higher inertia means that we have more of a chance of building up enough energy to overcome the energy loss due to drag and get all the way to the top.

In general, various aspects of the regular system will still be true in a fluid on the proper time scales. I think one thing that will be helpful to do when we start dealing with the fluid mechanics is to figure out exactly which things are true on which time scales.

What we're currently using this system for is the base dynamics of a high-gain observer, which I'll talk about in a post or two.

I apologize for being vague on these last two justifications. The truth is that I don't fully understand them myself. The first one will probably have to wait until I start toying with the full underwater system; the second (high-gain observers) I hope to figure out this weekend after I check out Khalil's book on control from Barker Library.

Hopefully, though, I've at least managed somewhat to convince you that the dynamics of this simpler system can be informative for the more complicated system.

Controlling the Underwater Cartpole

Now we finally get to how to control the underwater cartpole. Our desired control task is to get to the point $\left[ \begin{array}{cccc} 0 & \pi & 0 & 0 \end{array} \right]$. That is, we want to get to the unstable fixed point at $\theta = \pi$. In the language of my last post, if we wanted to come up with a good objective function $J$, we could say that $J$ is equal to the closest we ever get to $\theta = \pi$ (assuming we never pass it), and if we do get to $\theta = \pi$ then it is equal to the smallest velocities we ever get as we pass $\theta = \pi$; also, $J$ is equal to infinity if $x$ ever gets too large (because we run into a wall), or if $u$ gets too large (because we can only apply a finite amount of acceleration).

You will notice that I am being pretty vague about how exactly to define $J$ (my definition above wouldn't really do, as it would favor policies that just barely fail to get to $\theta = \pi$ over policies that go past it too quickly, which we will see is suboptimal). There are two reasons for my vagueness -- first, there are really two different parts to the control action --- swing-up and balancing. Each of these parts should really have its own cost function, as once you can do both individually it is pretty easy to combine them. Secondly, I'm not really going to care all that much about the cost function for what I say below. I did have occasion to use a more well-defined cost function for the swing-up when I was doing learning-based control, but this didn't make its way (other than by providing motivation) into the final controller.

I should point out that the actual physical device we have is more velocity-limited than acceleration-limited. It can apply pretty impressive accelerations, but it can also potentially damage itself at high velocities (by running into a wall too quickly). We can in theory push it to pretty high velocities as well, but I'm a little bit hesitant to do so unless it becomes clearly necessary, as breaking the device would suck (it takes a few weeks to get it repaired). As it stands, I haven't (purposely) run it at higher velocities than 1.5 meters/sec, which is already reasonably fast if you consider that the range of linear motion is only 23.4 cm.

But now I'm getting sidetracked. Let's get back to swing-up and balancing. As I said, we can really divide the overall control problem into two separate problems of swing-up and balancing. For swing-up, we just want to get enough energy into the system for it to get up to $\theta = \pi$. We don't care if it's going too fast at $\theta = \pi$ to actually balance. This is because it is usually harder to add energy to a system than to remove energy, so if we're in a situation where we have more energy than necessary to get to the top, we can always just perform the same control policy less efficiently to get the right amount of energy.

For balancing, we assume that we are fairly close to the desired destination point, and we just want to get the rest of the way there. As I mentioned last time, balancing is generally the easier of the two problems because of LQR control.

In actuality, these problems cannot be completely separated, due to the finite amount of space we have to move the cart in. If the swing up takes us to the very edge of the available space, then the balancing controller might not have room to actually balance the pendulum.

Swing-up

I will first go in to detail on the problem of swing-up. The way I think about this is that the pendulum has some amount of energy, and that energy gets sapped away due to drag. In the underwater case, the drag is significant enough that we really just want to add as much energy as possible. How can we do this? You will recall from classical mechanics that the faster an object is moving, the faster you can add energy to that object. Also, the equations of motion show us that an acceleration in $x$ has the greatest effect on $\dot{\theta}$ when $\cos(\theta)$ is largest, that is, when $\theta = 0$ or $\theta = \pi$. At the same time, we expect the pendulum to be moving fastest when $\theta = 0$, since at that point it has the smallest potential energy, and therefore (ignoring energy loss due to drag), the highest kinetic energy. So applying force will always be most useful when $\theta = 0$.

Now there is a slight problem with this argument. The problem is that, as I keep mentioning, the cart only has a finite distance in which to move. If we accelerate the cart in one direction, it will keep moving until we again accelerate it in the opposite direction. So even though we could potentially apply a large force at $\theta = 0$, we will have to apply a similarly large force later, in the opposite direction. I claim, however, that the following policy is still optimal: apply a large force at $\theta = 0$, sustain that force until it becomes necessary to decelerate (to avoid running into a wall), then apply a large decelerating force. I can't prove rigorously that this is the optimal strategy, but the reasoning is that this adds energy when $\cos(\theta)$ is changing the fastest, so by the time we have to decelerate and remove energy $\cos(\theta)$ will be significantly smaller, and therefore our deceleration will have less effect on the total energy.

To do the swing-up, then, we just keep repeating this policy whenever we go past $\theta = 0$ (assuming that we can accelerate in the appropriate direction to add energy to the system). The final optimization is that, once we get past $\|\theta\| = \frac{\pi}{2}$, the relationship between $\ddot{x}$ and $\ddot{\theta}$ flips sign, and so we would like to apply the same policy of rapid acceleration and deceleration in this regime as well. This time, however, we don't wait until we get to $\theta = \pi$, as at that point we'd be done. Instead, we should perform the energy pumping at $\dot{\theta} = 0$, which will cause $\dot{\theta}$ to increase above $0$ again, and then go in the opposite direction to pump more energy when $\dot{\theta}$ becomes $0$ for the second time.

I hope that wasn't too confusing of an explanation. When I get back to lab on Monday, I'll put up a video of a matlab simulation of this policy, so that it's more clear what I mean. At any rate, that's the idea behind swing-up: use up all of your space in the $x$-direction to pump energy into the system at maximum acceleration, doing so at $\theta = 0$ and when $\dot{\theta} = 0$ and we are past $\|\theta\| = \frac{\pi}{2}$. Now, on to balancing.

Balancing

As I mentioned, if we have a good linear model of our system, we can perform LQR control. So the only real problem here is to get a good linear model. To answer Arvind's question from last time, if we want good performance out of our LQR controller, we should also worry about the cost matrices $Q$ and $R$; for this system, the amount of space we have to balance (23.4cm, down to 18cm after adding in safeties to avoid hitting the wall) is small enough that it's actually necessary to worry about $Q$ and $R$ a bit, which I'll get to later.

First, I want to talk about how to get a good linear model. To balance, we really want a good linearization about $\theta = \pi$. Unfortunately, this is an unstable fixed point so it's hard to collect data around it. It's easier to instead get a good linearization about $\theta = 0$ and then flip the signs of the appropriate variables to get a linear model about $\theta = \pi$. My approach to getting this model was to first figure out what it would look like, then collect data, and finally do a least squares fit on that data.

Since we can't collect data continuously, we need a discrete time linear model. This will look like

$x_{n+1} = Ax_n + Bu_n$

In our specific case, $A$ and $B$ will look like this:

$\left[ \begin{array}{c} \theta_{n+1} \ y_{n+1} \ \dot{theta}{n+1} \ \dot{y}{n+1} \end{array} \right] = \left[ \begin{array}{cccc} 1 & 0 & dt & 0 \ 0 & 1 & 0 & dt \ c_1 & 0 & c_2 & 0 \ 0 & 0 & 0 & 1 \end{array} \right] \left[ \begin{array}{c} \theta_n \ y_n \ \dot{\theta}_n \ \dot{y}_n \end{array} \right] + \left[ \begin{array}{c} 0 \ 0 \ c_3 \ dt \end{array} \right]$

I got this form by noting that we definitely know how $\theta$, $y$, and $\dot{y}$ evolve with time, and the only question is what happens with $\dot{\theta}$. On the other hand, clearly $\dot{\theta}$ cannot depend on $y$ or $\dot{y}$ (since we can set them arbitrarily by choosing a different inertial reference frame). This leaves only three variables to determine.

Once we have this form, we need to collect good data. The important thing to make sure of is that the structure of the data doesn't show up in the model, since we care about the system, not the data. This means that we don't want to input something like a sine or cosine wave, because that will only excite a single frequency of the system, and a linear system that is given something with a fixed frequency will output the same frequency. We should also avoid any sort of oscillation about $x = 0$, or else our model might end up thinking that it's supposed to oscillate about $x = 0$ in general. I am sure there are other potential issues, and I don't really know much about good experimental design, so I can't talk much about this, but the two issues above are ones that I happened to run into personally.

What I ended up doing was taking two different functions of $x$ that had a linearly increasing frequency, then differentiating twice to get acceleration profiles to feed into the system. I used these two data sets to do a least squares fit on $c_1$, $c_2$, and $c_3$, and then I had my model. I transformed by discrete time model into a continuous time model (MATLAB has a function called d2c that can do this), inverted the appropriate variables, and got a model about the upright ($\theta = \pi$).

Now the only problem was how to choose $Q$ and $R$. The answer was this: I made $R$ fairly small ($0.1$), since we had a very strong actuator so large accelerations were fine. Then, I made the penalties on position larger than the penalties on velocity (since position is really what we care about). Finally, I thought about the amount that I would want the cart to slide to compensate for a given disturbance in $\theta$, and used this to choose a ratio between costs on $\theta$ and costs on $x$. In the end, this gave me $Q = \left[ \begin{array}{cccc} 40 & 0 & 0 & 0 \ 0 & 10 & 0 & 0 \ 0 & 0 & 4 & 0 \ 0 & 0 & 0 & 1 \end{array} \right]$.

I wanted to end with a video of the balancing controller in action, but unfortunately I can't get my Android phone to upload video over the wireless, so that will have to wait.